I now work at Xiaomi AI Lab as a senior engineer, developing Next-gen Kaldi under the leadership of Daniel Povey. Our team focuses on advanced, efficient, open-source end-to-end (E2E) Automatic Speech Recognition. If you are interested in the Next-gen Kaldi project and want to know more about it, feel free to email me at wkang@pku.edu.cn.

I graduated from Peking University with a master’s degree in Technology of Computer Application, advised by Tengjiao Wang (王腾蛟). I received my bachelor’s degree from the School of Electronic Science and Engineering, Nanjing University.

I used to work for China Highway Engineering Consultants Corporation (CHECC) as a Big Data Engineer. In the spring of 2019, I switched my focus to Automatic Speech Recognition. I am grateful to Mobvoi for giving me the chance to start ASR research as a newcomer to the field. I worked there for more than two years, focusing on intelligent cockpit systems. Then, in the summer of 2021, I joined Daniel’s team.

🔥 News

- 2025.02: 🎉🎉 CR-CTC [pdf] is accepted by ICLR 2025.

- 2024.01: 🎉🎉 Zipformer [pdf] is accepted by ICLR 2024.

- 2023.12: 🎉🎉 Two papers, Libriheavy [pdf] and PromptAsr [pdf], are accepted by ICASSP 2024.

- 2023.05: 🎉🎉 We release a new, stable version of the Zipformer code.

- 2023.05: 🎉🎉 Two papers, Blank skipping for transducer [pdf] and delay-penalized CTC [pdf], are accepted by Interspeech 2023.

- 2023.02: 🎉🎉 Three papers, Fast decoding for transducer [pdf], MVQ training [pdf], and Delay-penalized transducer [pdf], are accepted by ICASSP 2023.

- 2022.11: 🎉🎉 We release the first version of the Zipformer code.

- 2022.06: 🎉🎉 We start the sherpa, sherpa-onnx, and sherpa-ncnn projects, ASR runtimes based on libtorch, ONNX Runtime, and ncnn, respectively.

- 2022.06: 🎉🎉 The pruned RNN-T paper [pdf] is accepted by Interspeech 2022.

- 2022.05: 🎉🎉 We finish a reworked version of Conformer that converges faster, trains stably with fp16, and performs slightly better [code].

- 2022.03: 🎉🎉 We finish our pruned RNN-T loss (also available in k2), which is much faster and more memory-efficient than RNNTLoss in torchaudio.

- 2021.12: 🎉🎉 We shift our direction from CTC/MMI to RNN-T-like models, because they are better suited to efficient streaming ASR.

- 2021.09: 🎉🎉 We release the first version of Icefall at Interspeech 2021.

- 2021.06: 🎉🎉 I join Daniel’s team at Xiaomi.

🚀 Projects

k2: The core algorithms of Next-gen Kaldi

- Ragged tensors running on both CPU and GPU.

- Differentiable Finite State Acceptor.

- Pruned RNN-T Loss.

Icefall: The recipes of Next-gen Kaldi

- Conformer CTC / MMI.

- Xformer transducer.

- MVQ training.

- Pipelines for building an ASR system.

📝 Publications

Pruned RNN-T for fast, memory-efficient ASR training

Fangjun Kuang, Liyong Guo, Wei Kang, Long Lin, Mingshuang Luo, Zengwei Yao, Daniel Povey

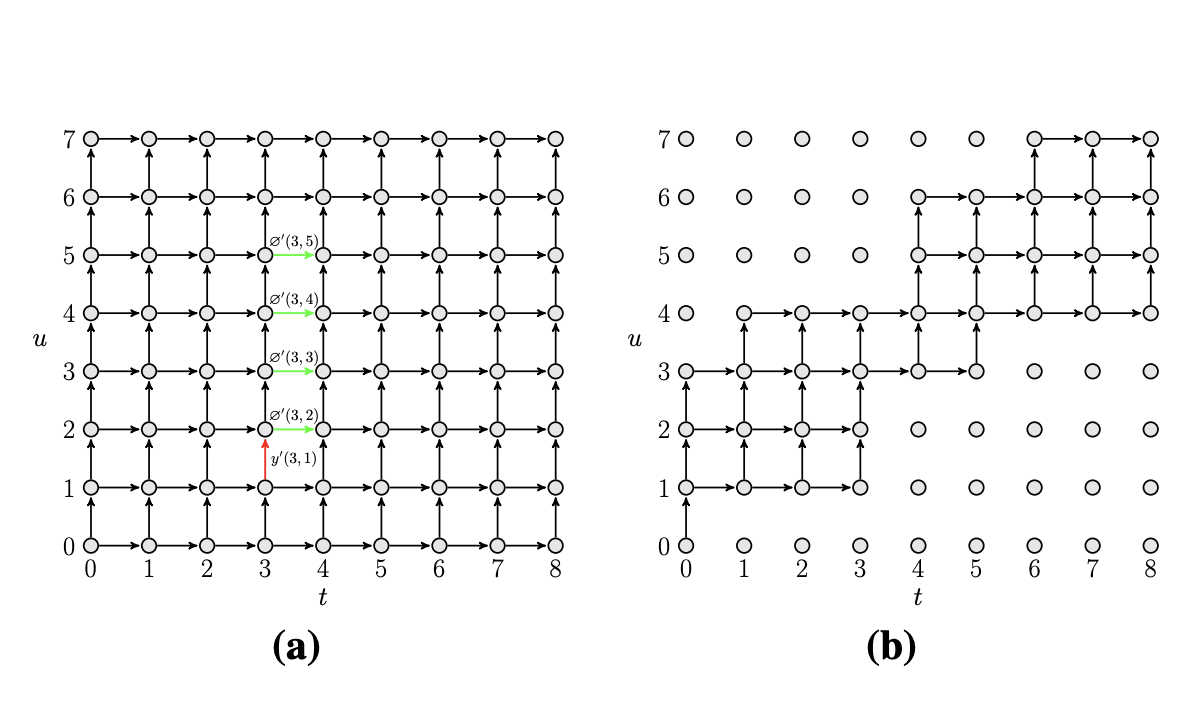

- We introduce a method for faster and more memory-efficient RNN-T loss computation.

- We first obtain pruning bounds for the RNN-T recursion using a simple joiner network that is linear in the encoder and decoder embeddings.

- We then use those pruning bounds to evaluate the full, non-linear joiner network.
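The three steps above can be illustrated with a small NumPy sketch. It is not the k2 implementation, and it rests on assumptions that the source does not state: the simple joiner is taken to be additive (logits[t, u, v] = am[t, v] + lm[u, v], where am and lm are the encoder and decoder embeddings already projected to the vocabulary), the pruning bounds are derived from per-node occupation probabilities of that simple model, each frame keeps the window of s_range symbol positions with the most occupation mass (forced to be non-decreasing over time), and the full non-linear joiner is a hypothetical tanh(enc[t] + dec[u]) @ w_out. All function names are made up for illustration.

```python
import numpy as np


def simple_joiner_log_probs(am, lm, symbols, blank=0):
    """Log-probs from an assumed additive joiner: logits[t, u, v] = am[t, v] + lm[u, v].

    am: (T, V) encoder-side projection; lm: (U + 1, V) decoder-side projection;
    symbols: (U,) target token ids. Because the joiner is linear, the softmax
    normalizer is a matrix product, so no (T, U + 1, V) tensor is built.
    """
    am_max = am.max(axis=1, keepdims=True)  # (T, 1), for numerical stability
    lm_max = lm.max(axis=1, keepdims=True)  # (U + 1, 1)
    # normalizer[t, u] = log sum_v exp(am[t, v] + lm[u, v])
    normalizer = (np.log(np.exp(am - am_max) @ np.exp(lm - lm_max).T)
                  + am_max + lm_max.T)  # (T, U + 1)
    U = len(symbols)
    # px[t, u]: log-prob of emitting symbols[u] at lattice node (t, u), u < U
    px = am[:, symbols] + lm[np.arange(U), symbols][None, :] - normalizer[:, :U]
    # py[t, u]: log-prob of emitting blank (moving to frame t + 1) at (t, u)
    py = am[:, [blank]] + lm[:, blank][None, :] - normalizer
    return px, py


def occupation_probs(px, py):
    """Forward-backward over the RNN-T lattice; returns P(path visits (t, u))."""
    T, U1 = py.shape
    U = U1 - 1
    alpha = np.full((T, U1), -np.inf)
    beta = np.full((T, U1), -np.inf)
    alpha[0, 0] = 0.0
    for t in range(T):
        for u in range(U1):
            if t == 0 and u == 0:
                continue
            a = alpha[t - 1, u] + py[t - 1, u] if t > 0 else -np.inf
            if u > 0:
                a = np.logaddexp(a, alpha[t, u - 1] + px[t, u - 1])
            alpha[t, u] = a
    beta[T - 1, U] = py[T - 1, U]  # the final blank ends the sequence
    for t in range(T - 1, -1, -1):
        for u in range(U, -1, -1):
            if t == T - 1 and u == U:
                continue
            b = beta[t + 1, u] + py[t, u] if t < T - 1 else -np.inf
            if u < U:
                b = np.logaddexp(b, beta[t, u + 1] + px[t, u])
            beta[t, u] = b
    total = alpha[T - 1, U] + py[T - 1, U]  # log-likelihood of the transcript
    return np.exp(alpha + beta - total), total


def prune_ranges(occ, s_range):
    """Pick a window [s_t, s_t + s_range) of symbol positions for each frame t.

    Simplified heuristic (an assumption, not k2's exact rule): take the window
    with the largest occupation mass, then force the starts to be non-decreasing,
    since u never decreases along an RNN-T path.
    """
    T, U1 = occ.shape
    s_range = min(s_range, U1)
    csum = np.concatenate([np.zeros((T, 1)), np.cumsum(occ, axis=1)], axis=1)
    window_mass = csum[:, s_range:] - csum[:, :-s_range]  # sum of occ[t, s:s + s_range]
    starts = np.maximum.accumulate(np.argmax(window_mass, axis=1))
    return starts[:, None] + np.arange(s_range)[None, :]  # (T, s_range)


def pruned_full_joiner(enc, dec, ranges, w_out):
    """Evaluate a hypothetical non-linear joiner only at the pruned (t, u) nodes.

    enc: (T, D), dec: (U + 1, D), ranges: (T, S), w_out: (D, V).
    Returns (T, S, V) logits instead of (T, U + 1, V).
    """
    hidden = np.tanh(enc[:, None, :] + dec[ranges])  # (T, S, D)
    return hidden @ w_out


rng = np.random.default_rng(0)
T, U, V, D = 6, 4, 10, 8
symbols = rng.integers(1, V, size=U)  # non-blank target ids
am, lm = rng.normal(size=(T, V)), rng.normal(size=(U + 1, V))
px, py = simple_joiner_log_probs(am, lm, symbols)
occ, total = occupation_probs(px, py)
ranges = prune_ranges(occ, s_range=2)
logits = pruned_full_joiner(rng.normal(size=(T, D)), rng.normal(size=(U + 1, D)),
                            ranges, rng.normal(size=(D, V)))
print(ranges.shape, logits.shape)  # prints (6, 2) (6, 2, 10)
```

The memory saving is visible in the shapes: the full joiner output shrinks from (T, U + 1, V) to (T, s_range, V), while the simple joiner's normalizer needs only one (T, V) by (V, U + 1) matrix product. A complete implementation would also compute the RNN-T loss on the pruned lattice, which this sketch omits.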

📖 Educations

- 2014.09 - 2017.06 (Master), Computer Science (CS), Peking University (PKU).

- Technology of Computer Application, Institute of Network Computing and Information Systems (NC&IS)

- 2010.09 - 2014.06 (Bachelor), Electronics Engineering (EE), Nanjing University (NJU).

- Information Electronics, School of Electronic Science and Engineering

- 2007.09 - 2010.06, Changting No.1 Middle School, Fujian Province.

💬 Invited Talks

- 2022.07, I give a presentation on Next-gen Kaldi. [video]

💻 Work Experiences

- 2021.06 - Now, Xiaomi Corporation, Senior Engineer.

- 2019.01 - 2021.05, Mobvoi Beijing, Speech Engineer.

- 2017.07 - 2018.12, China Highway Engineering Consultants Corporation (CHECC), Big Data Engineer.

- 2016.03 - 2016.12 (Internship), Mobvoi Beijing, Search Engineer.